Last week an incredible paper was released showing how neural networks can separate the “style” of an image from its content and apply that style to another image. It’s a great read, even if some of the math goes over your head, and I encourage you to take a look.

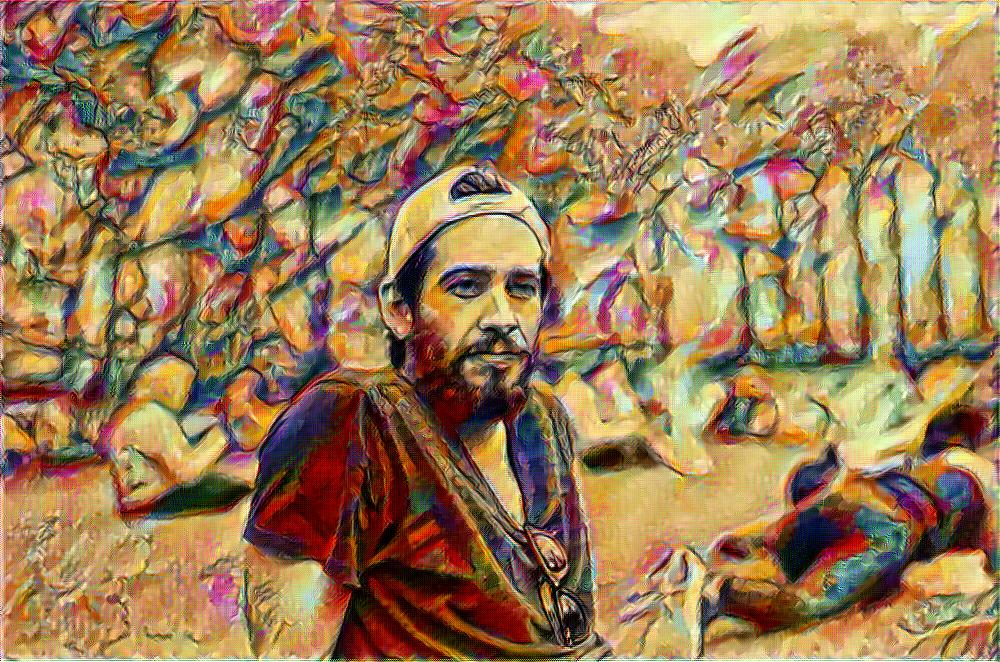

Their basic breakthrough is that these neural networks, which are heavily used by companies like Google, Amazon, and Facebook, learn to see what actual content looks like in images. By isolating the layer responses the network uses to distinguish textures, the algorithm can capture a “style” or texture, independent of the overall shape of the content image.
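To make "texture independent of shape" a bit more concrete: the paper summarizes style at a layer by the correlations between that layer's feature maps (a Gram matrix), which throws away where in the image each feature fired. Here is a tiny pure-Python sketch of that idea. The function names and the toy feature values are made up for illustration; this is not Kai's code, and a real implementation would run on the network's actual activations.

```python
def gram_matrix(features):
    """features: list of C feature maps, each a flat list of N activations.

    Returns the C x C matrix of inner products between feature maps.
    """
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features]
            for fi in features]


def shuffle_positions(features, order):
    """Move every spatial position to a new place, the same way in each map."""
    return [[fmap[i] for i in order] for fmap in features]


# Two toy feature maps over four spatial positions (hypothetical values).
features = [[1.0, 0.0, 2.0, 0.0],
            [0.0, 3.0, 1.0, 0.0]]

# Rearranging the positions changes the "shape" of the image...
moved = shuffle_positions(features, [2, 0, 3, 1])

# ...but the feature correlations, i.e. the style summary, stay the same.
g_original = gram_matrix(features)
g_moved = gram_matrix(moved)
print(g_original)             # [[5.0, 2.0], [2.0, 10.0]]
print(g_original == g_moved)  # True
```

Because the Gram matrix only records which features tend to fire together, two images with very different layouts can still share the same style summary.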

Today, Kai Sheng Tai released a Torch implementation of that paper. It differs in a few respects, the largest being that it uses Google’s Inception network rather than the paper’s (newer) VGG-19. Kai’s code also seems to get the best results when the optimization starts from your input image, rather than from noise as in the paper. The code is beautifully written, well commented, and easy to read. Please check it out.

(Thanks for the work, Kai!)
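If "starting from your input image rather than noise" sounds abstract: the algorithm produces its result by repeatedly nudging the pixels of some starting image, so the only question is what that starting image is. The sketch below is a toy under loud assumptions. `init_image` and `pixel_content_loss` are hypothetical names, images are flat lists of pixel values, and the loss is a plain pixel-space stand-in for the paper's content loss, which is actually measured on network activations.

```python
import random


def init_image(content, mode, seed=0):
    """Choose the starting image for the optimization (illustrative only)."""
    if mode == "content":
        # Start from a copy of the input image.
        return list(content)
    if mode == "noise":
        # Start from uniform random noise of the same size.
        rng = random.Random(seed)
        return [rng.random() for _ in content]
    raise ValueError("mode must be 'content' or 'noise'")


def pixel_content_loss(image, content):
    """Mean squared pixel difference: a toy stand-in for the content loss."""
    return sum((x - c) ** 2 for x, c in zip(image, content)) / len(content)


# A hypothetical four-pixel "image".
content = [0.2, 0.8, 0.5, 0.1]

from_content = init_image(content, "content")
from_noise = init_image(content, "noise")

print(pixel_content_loss(from_content, content))      # 0.0
print(pixel_content_loss(from_noise, content) > 0.0)  # True
```

Starting from the input image means the optimizer begins with the content already in place and only has to pull in the style, while a noise start has to recover both.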

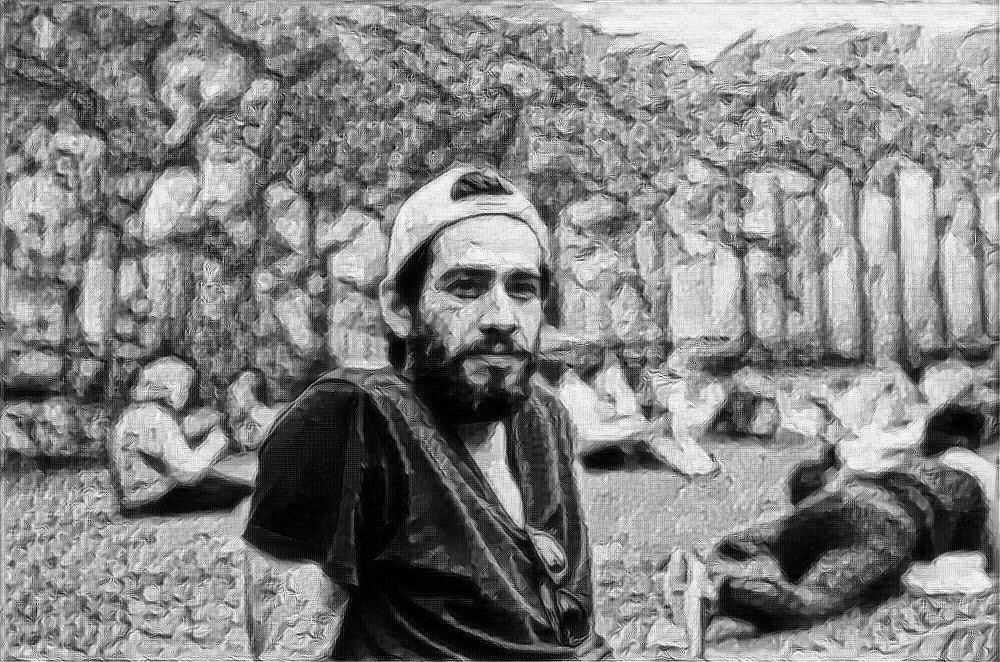

The rest of these images were generated on my GTX 980 Ti, under Ubuntu 15.04, with cunn (Torch’s CUDA neural network package). Each image takes about 60 seconds to generate. You can change the image resolution in images.lua.

Enjoy!