TLDR: I turned a Teensy LC into a plant-controlled MIDI robot bongo. I used the capacitive touch sensors built into the Teensy, along with some guitar shielding copper stuck in a plant’s soil, to trigger MIDI clips in Ableton Live and jam along with a robot bongo.

I don’t know how it ended up this way, but I made a plant-controlled robot bongo. I suppose if you graphed out my life, from being 12 years old and first installing Slackware 2.0 to now, it would be obvious that this is the sort of thing I’d end up doing. But that doesn’t make it any less weird to me.

Meeting Robotic Instruments

Last month I took a class at NYC Resistor taught by Troy Rogers called Making Music with Robotic Instruments. In under three hours we built a completely new MIDI-powered robot using rotary solenoids and found objects to play music.

Troy used an Arduino ATMEL clone with a MIDI shield to get MIDI in to trigger voltage on the solenoids. By changing the number of microseconds you let the voltage through on a MOSFET, you can make different levels of loudness on your strikes.
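To make the loudness idea concrete, here is a rough sketch of how a MIDI velocity could be mapped to a pulse length. It is written in Python for readability rather than as Arduino code, and the function name and the 1000 to 8000 microsecond range are assumptions for illustration, not values from Troy’s class. Real solenoids need their own tuning.

```python
def velocity_to_pulse_us(velocity, min_us=1000, max_us=8000):
    """Map a MIDI velocity (0-127) to a solenoid pulse length in microseconds.

    min_us and max_us are hypothetical limits: the shortest pulse that still
    produces an audible strike and the longest pulse you want to allow.
    """
    if velocity <= 0:
        return 0  # velocity 0 is treated as "no strike"
    velocity = min(velocity, 127)  # clamp out-of-range input
    # Linear interpolation: velocity 1 -> min_us, velocity 127 -> max_us.
    return min_us + (max_us - min_us) * (velocity - 1) // 126
```

A longer pulse keeps the MOSFET open longer, so the solenoid hits harder. A linear map is just the simplest starting point.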

We used Ableton Live and some other robotic instruments Troy made beforehand to make this:

Getting Solenoids Working

Once I saw everything working, I knew I needed to build my own robot to play music. But building the mounts, buying the multiple power supplies necessary (Troy had, I believe, 12V, 24V, and 48V power supplies), and buying the (expensive!) cymbals and snares all added up to a lot of money and effort just to try something.

So instead, I settled for ordering a few solenoids, MOSFETs, and diodes from Adafruit. I had an old Raspberry Pi lying around, and figured I could skip the MIDI and make an OSC server on the Raspberry Pi to play the drums instead. I also later realized I still had a matching 24V power supply left over from an earlier LED project.

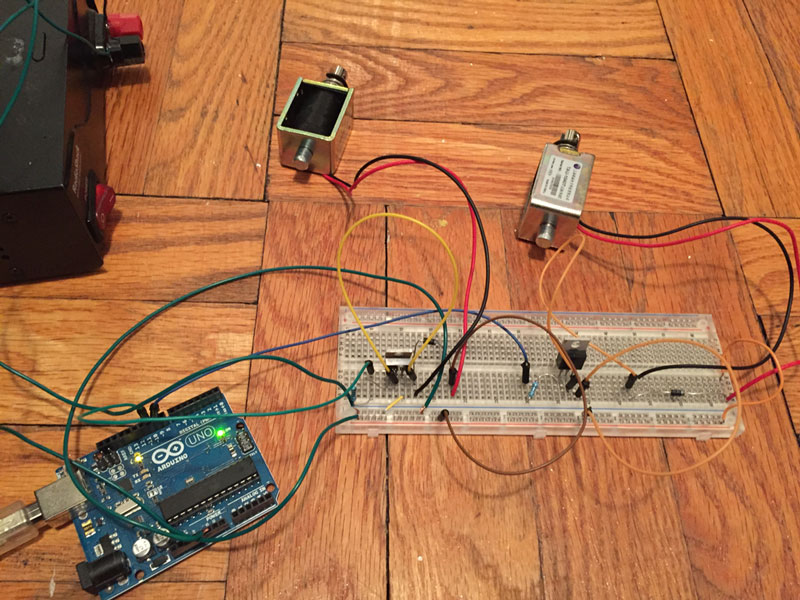

The circuit for triggering a solenoid from any IO pin is super simple. You put a resistor between the IO pin and the MOSFET’s left leg, and then a diode goes between ground and positive voltage across the remaining two legs of the MOSFET. See below:

In the process of building this, I realized I needed something to strike, and something to mount the solenoids to. I ended up taking an old dish rack my neighbor threw away and repurposing it as a mount for some bongos and a couple of plastic cowbells. This actually ended up being the most difficult part of the project: figuring out how to mount everything and get it lined up and playing interestingly with just a dish rack.

Making a Raspberry Pi Robot

So, once I built the dish rack and got the solenoids mounted, I needed a way to get Ableton Live to start sending OSC messages to the server on the Raspberry Pi. I wrote a quick Python script to read the local MIDI device (Macs can create virtual MIDI instruments natively, see here) and convert it to an OSC message to be sent over the network.

Once I got that part working, I could use my MIDI keyboard, have it send to the virtual MIDI port, the Python script would read that, fire it off to the Raspberry Pi, and voila, the solenoid would get triggered.
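For a sense of what that conversion involves, here is a minimal sketch using only the Python standard library. It is not the sendOSC.py from the repo. The `/drum` address, the function names, and the choice to send note and velocity as two integers are all assumptions made for illustration, and reading from the virtual MIDI port itself is left out.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def build_osc_message(address, *int_args):
    """Build an OSC 1.0 message whose arguments are all 32-bit integers."""
    type_tags = "," + "i" * len(int_args)
    payload = b"".join(struct.pack(">i", arg) for arg in int_args)  # big-endian
    return _osc_string(address) + _osc_string(type_tags) + payload


def midi_note_on_to_osc(midi_bytes, address="/drum"):
    """Return an OSC packet for a MIDI note-on, or None for any other message.

    The "/drum" address is a hypothetical choice for this sketch.
    """
    if len(midi_bytes) != 3:
        return None
    status, note, velocity = midi_bytes
    # 0x9n is note-on for channel n; note-on with velocity 0 means note-off.
    if status & 0xF0 != 0x90 or velocity == 0:
        return None
    return build_osc_message(address, note, velocity)


def send_osc(packet, host, port):
    """Fire the packet at the OSC server over UDP (no delivery guarantee)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Usage would look something like `send_osc(midi_note_on_to_osc(bytes([0x90, 60, 100])), host, port)`, where the host and port are whatever the Raspberry Pi’s OSC server listens on.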

The code for this is in the GitHub repo under the drumsOnPi directory. To convert your local virtual MIDI instrument to OSC and send it, use the Python file named sendOSC.py in the root directory.

The Devil is in the Latency

But with music and computers especially, there is always one evil lurking behind everything. Latency. During the day when nobody was at home and on their wifi (I live in densely populated Brooklyn), the robot drums worked perfectly. But at night, latency could be all over the place, and the drums would not be able to keep rhythm in any sort of acceptable way.

Accidental Luck, Thanks Teensy

Luckily, I accidentally fried the Raspberry Pi while moving it. Like a moron, I let one of the live wires go where it shouldn’t and the Raspberry Pi smoked up and died.

This happened right around the time that the Raspberry Pi 2 came out, so I got on to Adafruit again, and took the opportunity to get another microcontroller for my Moog Werkstatt, called the Teensy LC.

My original plan was to use the Teensy LC’s analog voltage pin as a CV input to my Werkstatt.

But the Teensy LC ended up being a much more impressive little microcontroller than I’d anticipated, and I ended up using it to run my Werkstatt, my capacitive touch plants, and my robot bongos. It did all of this without even breaking a sweat or slowing down a bit.

MIDI Native and Capacitive Touch?!

Incredibly, the Teensy LC is capable of being a native MIDI device, so all I had to do was plug it into my computer, and it shows up as just another MIDI instrument, while I can reprogram it and debug from Arduino at the same time.

This is insane. The guy behind the Teensy, Paul Stoffregen, deserves a medal for all the amazing work he’s done. The Teensy platform is by far the best microcontroller I’ve ever worked with. Seriously, go buy one now. Teensy LCs are under $12 at his site.

Once the Teensy was triggering the solenoids as a MIDI instrument, it was time to get touching plants converted into MIDI.

Converting Plants Into Instruments

The first step is building the wires that go into the plants. This is just normal stranded wire, stripped a good length at one end, with some guitar shielding copper tape wound around the stripped part. Stick that end into the soil, and then connect the other end of the wire to one of the Teensy’s touch read pins.

I wrote a program to log the capacitance values of the plants to get a baseline idea of what the values were. Those baseline values are hardcoded in the source, and if you end up trying it out, they will probably be the first thing you’ll need to change. (Side note: DO NOT USE the TouchSense library with the Teensy LC. Instead, use the built-in touchRead() function. It takes a pin and returns the capacitance. The TouchSense library won’t compile for the Teensy.)

Touch sensing in the plants is by far the most buggy part of the code. When it’s been a couple days and the plant’s soil has dried up, the capacitance of the plants can change dramatically. So probably don’t hardcode those values like I did. Instead look for a massive change in values. You’ll see this and get a feel for it by looking at the serial values returned while touching your plant in real time.
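One way to look for a massive change instead of a fixed value is to keep a slowly moving baseline and compare each reading against it. The sketch below shows that logic in Python for readability. On the Teensy it would be Arduino code fed by touchRead(). The class name, the 1.5x ratio, and the smoothing factor are assumptions for illustration, and it assumes readings go up when the plant is touched.

```python
class TouchDetector:
    """Detect touches as large jumps above a slowly drifting baseline."""

    def __init__(self, ratio=1.5, smoothing=0.05):
        self.ratio = ratio          # hypothetical: how big a jump counts as a touch
        self.smoothing = smoothing  # hypothetical: how fast the baseline follows drift
        self.baseline = None
        self.touched = False

    def update(self, reading):
        """Feed one raw reading; return True only at the moment a touch starts."""
        if self.baseline is None:
            self.baseline = float(reading)  # first reading seeds the baseline
            return False
        is_touch = reading > self.baseline * self.ratio
        started = is_touch and not self.touched
        self.touched = is_touch
        if not is_touch:
            # Follow slow changes (drying soil) only while nobody is touching,
            # so a long touch does not get absorbed into the baseline.
            self.baseline += self.smoothing * (reading - self.baseline)
        return started
```

Each plant would get its own detector, with `update()` called once per loop. A MIDI note would be sent whenever it returns True.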

Put an LED on It!

The final piece was adding some light feedback for when you touch the plants. With these sorts of projects, it’s rough not knowing which part is broken, and having visual feedback that the microcontroller is working and has recognized your touch is a big help for debugging.

I had an Arduino Uno with a DMX shield lying around from an earlier project that I repurposed for this. I basically set four digital IO pins on the Uno to input mode, and then connected them to the Teensy. By default, the connected Teensy pins are all set to LOW, and one goes HIGH when a plant is touched, at the same time as the Teensy sends a MIDI message to the computer.

Programming DMX lighting is interesting. The DMX shield provides a function that lets you set a channel to a value between 0 and 255. On my LED strips, channels 1 – 3 are RED, GREEN, and BLUE on my first strip, and 4 – 6 are the same on the second strip. So to fade up to white you simply run a for loop from 0 to 255, with a delay, setting channels 1, 2, and 3 at each step.
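The fade loop looks roughly like this. It is a Python sketch of the logic, not the Arduino code from the repo: `set_channel` is a hypothetical stand-in for whatever function your DMX shield library provides, and the 10 ms delay is an arbitrary choice.

```python
import time


def fade_to_white(set_channel, channels=(1, 2, 3), step_delay_s=0.01):
    """Ramp the given DMX channels together from 0 to 255.

    set_channel(channel, value) is a hypothetical callback that writes one
    DMX channel; on the Arduino this would be the shield library's function.
    """
    for value in range(256):
        for channel in channels:
            set_channel(channel, value)  # red, green, and blue rise in step
        time.sleep(step_delay_s)         # controls how long the fade takes
```

Passing `channels=(4, 5, 6)` would fade the second strip instead, assuming it uses the same RGB layout.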

Show me the code!

It’s on GitHub. Enjoy.